TetraDiffusion: Tetrahedral Diffusion Models for 3D Shape Generation

1 ETH Zürich 2 University of Zürich

* equal contribution

TetraDiffusion operates on a tetrahedral partitioning of 3D space to enable efficient, high-resolution 3D shape generation. Our model introduces operators for convolution and transpose convolution that act directly on the tetrahedral partition, and seamlessly includes additional attributes such as color. It enables rapid sampling of detailed 3D objects in nearly real-time with unprecedented resolution, works on standard consumer hardware, and delivers superior results.

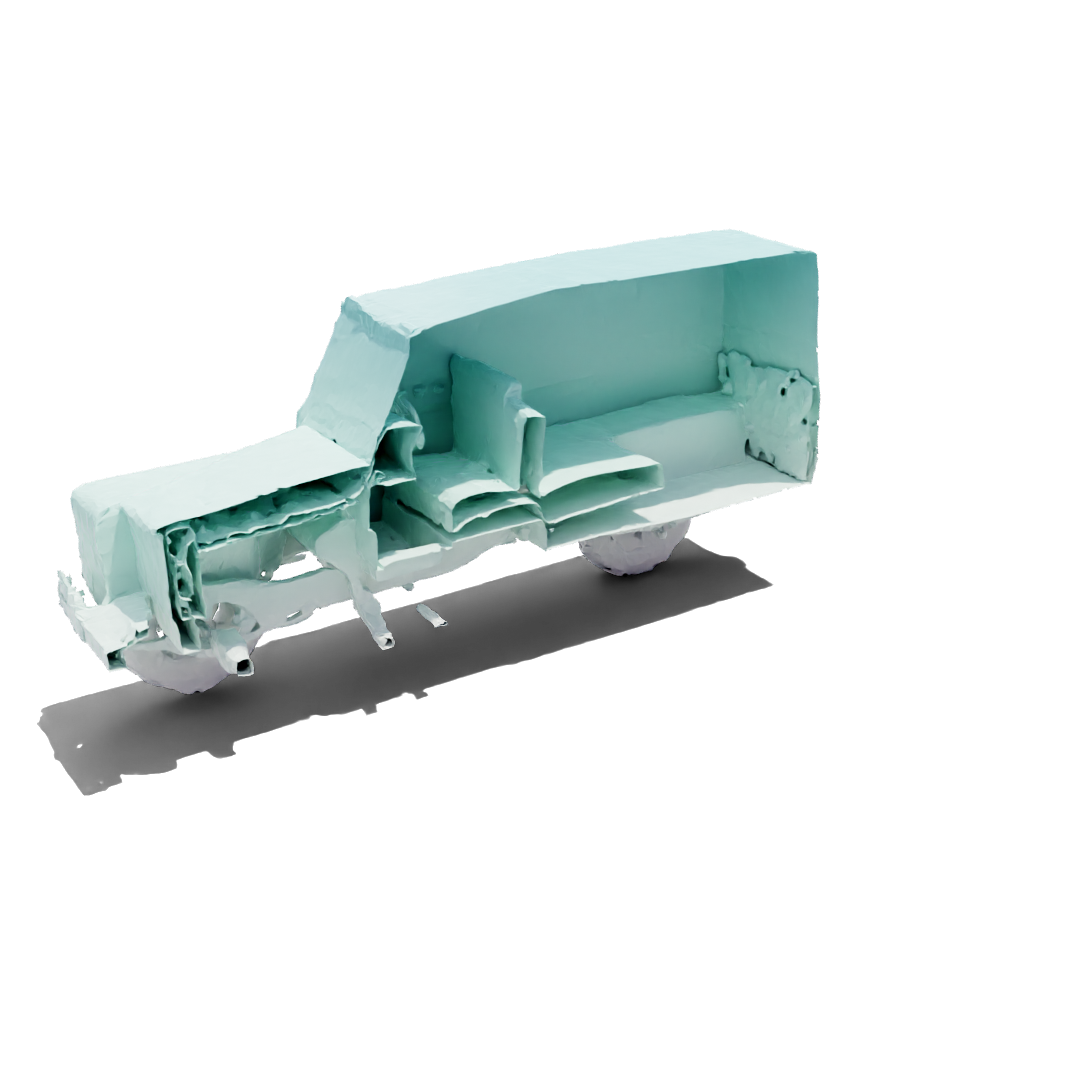

All meshes are shown without any postprocessing, hole-filling, or smoothing. Airplanes, bikes, and cars are from our high-resolution model; chairs are from our standard model.

Probabilistic denoising diffusion models (DDMs) have set a new standard for 2D image generation. Extending DDMs for 3D content creation is an active field of research. Here, we propose TetraDiffusion, a diffusion model that operates on a tetrahedral partitioning of 3D space to enable efficient, high-resolution 3D shape generation. Our model introduces operators for convolution and transpose convolution that act directly on the tetrahedral partition, and seamlessly includes additional attributes such as color. Remarkably, TetraDiffusion enables rapid sampling of detailed 3D objects in nearly real-time with unprecedented resolution. It's also adaptable for generating 3D shapes conditioned on 2D images. Compared to existing 3D mesh diffusion techniques, our method is up to 200 times faster in inference speed, works on standard consumer hardware, and delivers superior results.

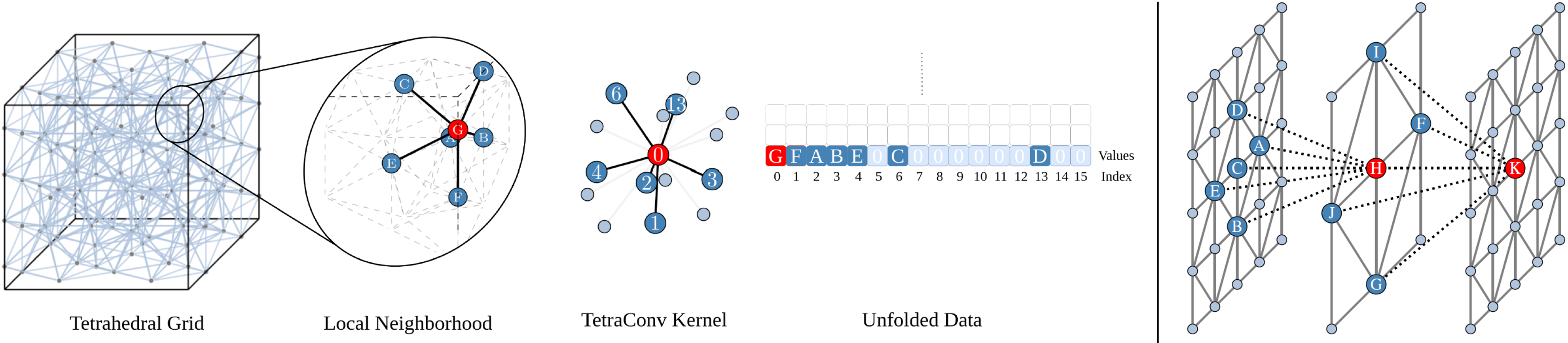

The project introduces a novel approach to 3D convolution called "Tetra Convolution", designed to work with displacement and signed distance fields on a tetrahedral grid. Regular 3D convolutions struggle with varying neighborhoods and connectivity, so Tetra Convolution instead exploits the tetrahedral A15 lattice structure to obtain a collision-free spatial ordering of vertices. This method outperforms traditional graph convolutions and eliminates the need to search for points under the kernel, as is required in KPConv. The ordering is obtained by discretely binning local edge orientations, which yields a smaller basis of reference directions than a 3D voxel convolution (16 versus 27). The TetraConv layer computes a weighted sum over each vertex and its neighborhood, handling varying neighborhood sizes with zero padding. This structure also enables flexible down- and upsampling on the tetrahedral grid, which is essential for convolutional learning.

Additionally, the project introduces "Grid Pruning", a technique to efficiently prune the tetrahedral grid, which is typically sparsely populated. It allows targeted deletion of unused vertices and connections, reducing memory consumption and increasing speed for both training and inference.
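The weighted sum computed by a TetraConv-style layer can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function names `bin_edges` and `tetra_conv`, the separate center weight, and the six axis-aligned reference directions in the usage lines are assumptions made for illustration (the actual layer uses reference directions derived from the A15 lattice). The sketch also assumes the binning is collision-free, i.e. no two neighbors of a vertex fall into the same bin.

```python
import numpy as np

def bin_edges(vertices, edges, ref_dirs):
    """Assign each directed edge (i -> j) to its closest reference direction."""
    d = vertices[edges[:, 1]] - vertices[edges[:, 0]]
    d = d / np.linalg.norm(d, axis=1, keepdims=True)
    # Largest cosine similarity picks the bin.
    return np.argmax(d @ ref_dirs.T, axis=1)

def tetra_conv(features, edges, bins, w_center, w_dirs):
    """Weighted sum over each vertex and its binned neighbors.

    features: (V, C_in)      edges: (E, 2) directed      bins: (E,)
    w_center: (C_in, C_out)  w_dirs: (K, C_in, C_out)
    """
    num_vertices, num_bins = features.shape[0], w_dirs.shape[0]
    # One slot per reference direction; slots without a neighbor stay zero
    # (zero padding for varying neighborhood sizes).
    slots = np.zeros((num_vertices, num_bins, features.shape[1]))
    # Assumes collision-free binning: each (vertex, bin) pair occurs at most once.
    slots[edges[:, 0], bins] = features[edges[:, 1]]
    return features @ w_center + np.einsum("vkc,kcd->vd", slots, w_dirs)

# Toy usage with placeholder axis-aligned directions (NOT the A15 directions).
ref_dirs = np.array([[1, 0, 0], [-1, 0, 0], [0, 1, 0],
                     [0, -1, 0], [0, 0, 1], [0, 0, -1]], dtype=float)
vertices = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0]], dtype=float)
edges = np.array([[0, 1], [1, 0], [0, 2], [2, 0]])
features = np.array([[1.0], [2.0], [4.0]])
bins = bin_edges(vertices, edges, ref_dirs)
out = tetra_conv(features, edges, bins, np.ones((1, 1)), np.ones((6, 1, 1)))
```

Because every neighbor lands in a fixed slot, the layer reduces to a dense tensor contraction, with no neighbor search at run time.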

Finally, the project presents TetraDiffusion, a process for diffusing features like deformation vectors and color directly in the tetrahedral space. The network integrates standard diffusion model architecture with tetrahedral convolution operators, group normalization, SiLU activations, and attention layers. This approach promises more efficient and effective processing of 3D data, especially in applications involving complex spatial relationships.
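To make the idea of diffusing per-vertex features concrete, the following sketch shows the standard forward (noising) step of a denoising diffusion model applied to a feature array defined on grid vertices. The linear noise schedule, the 1000 steps, and the 7-channel layout (3 deformation, 1 signed distance, 3 color) are assumptions chosen for illustration, not settings confirmed by the source; `make_schedule` and `q_sample` are hypothetical helper names.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal retention alpha_bar_t for a linear beta schedule (assumed)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bar, rng):
    """Draw x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I)."""
    noise = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return xt, noise

rng = np.random.default_rng(0)
alpha_bar = make_schedule()
# Hypothetical layout: one row per grid vertex, 7 channels per vertex.
x0 = rng.standard_normal((1024, 7))
xt, noise = q_sample(x0, t=500, alpha_bar=alpha_bar, rng=rng)
```

In a typical training setup, a denoising network (here, one built from tetrahedral convolutions) would be trained to predict `noise` from `xt` and `t`.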

| Method | Training GPU (GB) | Training speed (it/s) | Inference GPU (GB) | Inference speed (s/shape) |
|---|---|---|---|---|
| GET3D [1] | 13.3 | 0.10 | 11.3 | 0.83 |
| MeshDiffusion [2] | 76.6 | 0.5 | 29.2 (22.6†) | 714.3 (526.3†) |
| Ours* | 12.0 | 2.8 | 7.4 | 3.4 |
| Ours | 20.8 | 1.0 | 9.7 | 11.2 |
| Ours-hr* | 20.9 | 1.2 | 11.7 | 9.1 |
| Ours-hr | 78.2 | 0.3 | 42.1 | 33.3 |

Memory consumption and computing time of different generative shape models. † We implement 16-bit inference in MeshDiffusion (MD) for a fair comparison. Our methods labelled with a * are pruned versions, highlighting the efficiency boost of our tetrahedral formulation.
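The pruned variants in the table rely on Grid Pruning, i.e. deleting unused vertices and their connections. A minimal sketch of that bookkeeping is shown below. The function name `prune_grid` is hypothetical, and how the `keep` mask is obtained is not specified in the source; it is simply taken as an input here.

```python
import numpy as np

def prune_grid(vertices, edges, keep):
    """Drop vertices where `keep` is False and re-index the surviving edges.

    vertices: (V, 3) float    edges: (E, 2) int    keep: (V,) bool
    Returns the kept vertices, the re-indexed edges, and the old-to-new index map
    (-1 marks deleted vertices).
    """
    new_index = np.full(len(vertices), -1, dtype=np.int64)
    new_index[keep] = np.arange(int(keep.sum()))
    # An edge survives only if both of its endpoints survive.
    edge_kept = keep[edges[:, 0]] & keep[edges[:, 1]]
    return vertices[keep], new_index[edges[edge_kept]], new_index

vertices = np.arange(12, dtype=float).reshape(4, 3)
edges = np.array([[0, 1], [1, 2], [2, 3]])
keep = np.array([True, True, False, True])
pruned_vertices, pruned_edges, index_map = prune_grid(vertices, edges, keep)
```

Since feature arrays are indexed per vertex, memory and compute scale with the number of kept vertices rather than with the full grid.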

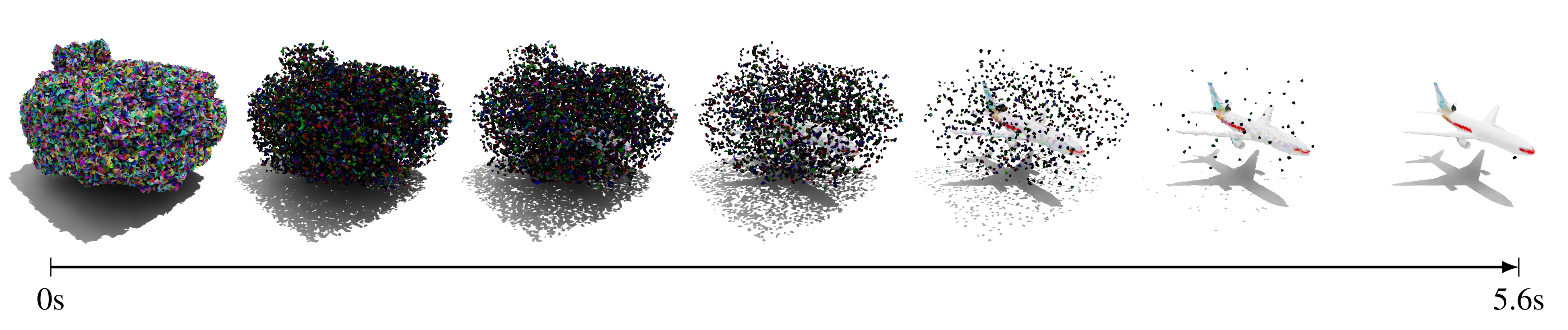

We can directly interpolate between two different shape instances using spherical interpolation to blend from start to end noise.
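Spherical interpolation between two noise samples can be sketched as follows. This is a generic slerp written for illustration, not code from the project; the function name `slerp`, the array shape, and the linear fallback for nearly parallel inputs are assumptions.

```python
import numpy as np

def slerp(z0, z1, lam):
    """Spherical interpolation between arrays z0 and z1 for lam in [0, 1]."""
    a, b = z0.ravel(), z1.ravel()
    cos_omega = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    if np.isclose(np.sin(omega), 0.0):
        # Directions are (anti)parallel: fall back to linear interpolation.
        return (1.0 - lam) * z0 + lam * z1
    return (np.sin((1.0 - lam) * omega) * z0 + np.sin(lam * omega) * z1) / np.sin(omega)

rng = np.random.default_rng(0)
z_start = rng.standard_normal((1024, 7))  # hypothetical per-vertex noise shape
z_end = rng.standard_normal((1024, 7))
# Each blended noise array would then be passed through the reverse diffusion process.
path = [slerp(z_start, z_end, lam) for lam in np.linspace(0.0, 1.0, 5)]
```

Unlike linear blending, slerp keeps the norm of intermediate samples close to that of Gaussian noise, so the blended inputs stay closer to what the denoiser saw during training.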

Our efficient architecture can generate meshes at unprecedented resolution, capturing intricate details such as chains, fine rims, brake disks, and brake handles. Importantly, these details are represented in the geometry of the mesh and not in texture maps. Diffusing per-vertex texture information additionally reinforces the geometry.

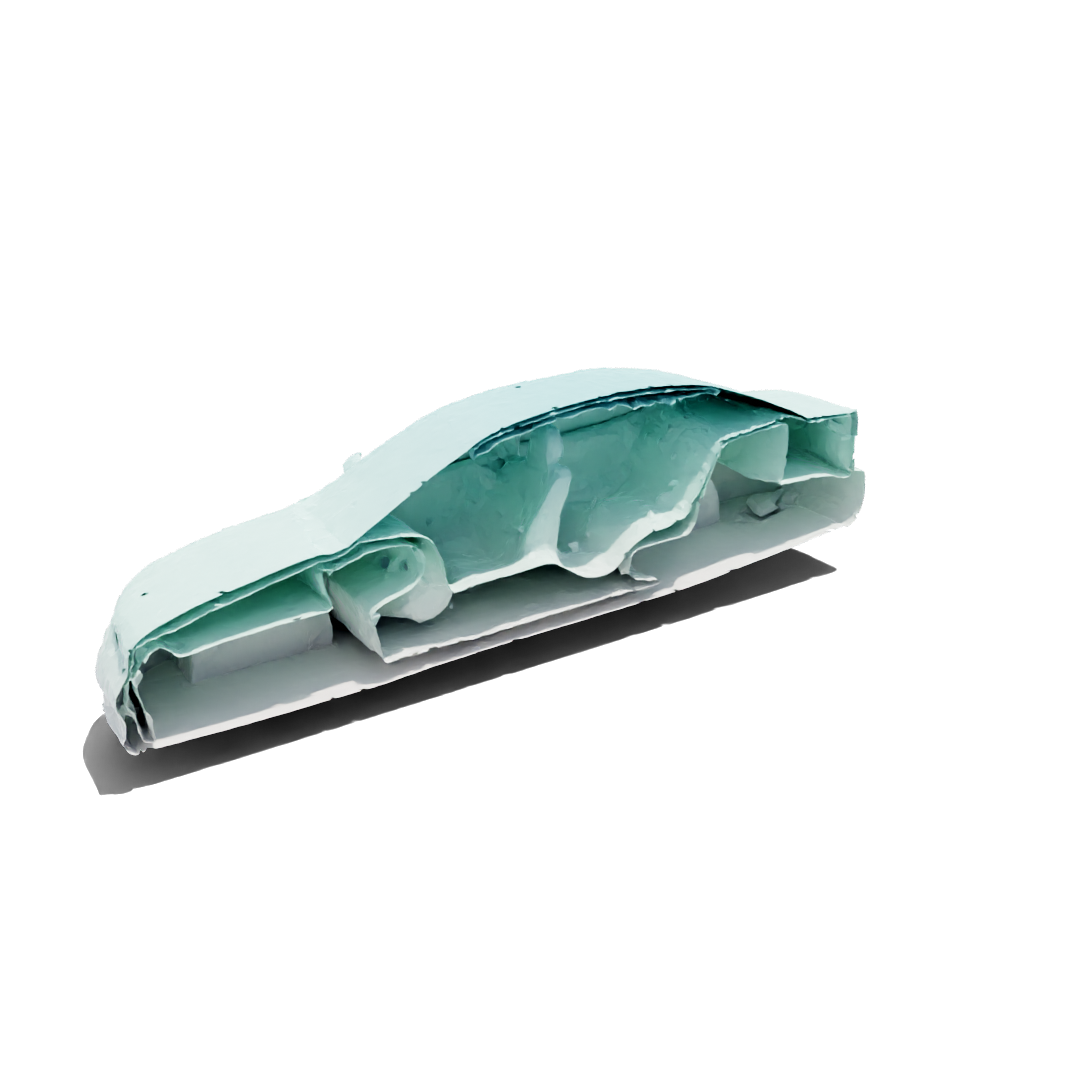

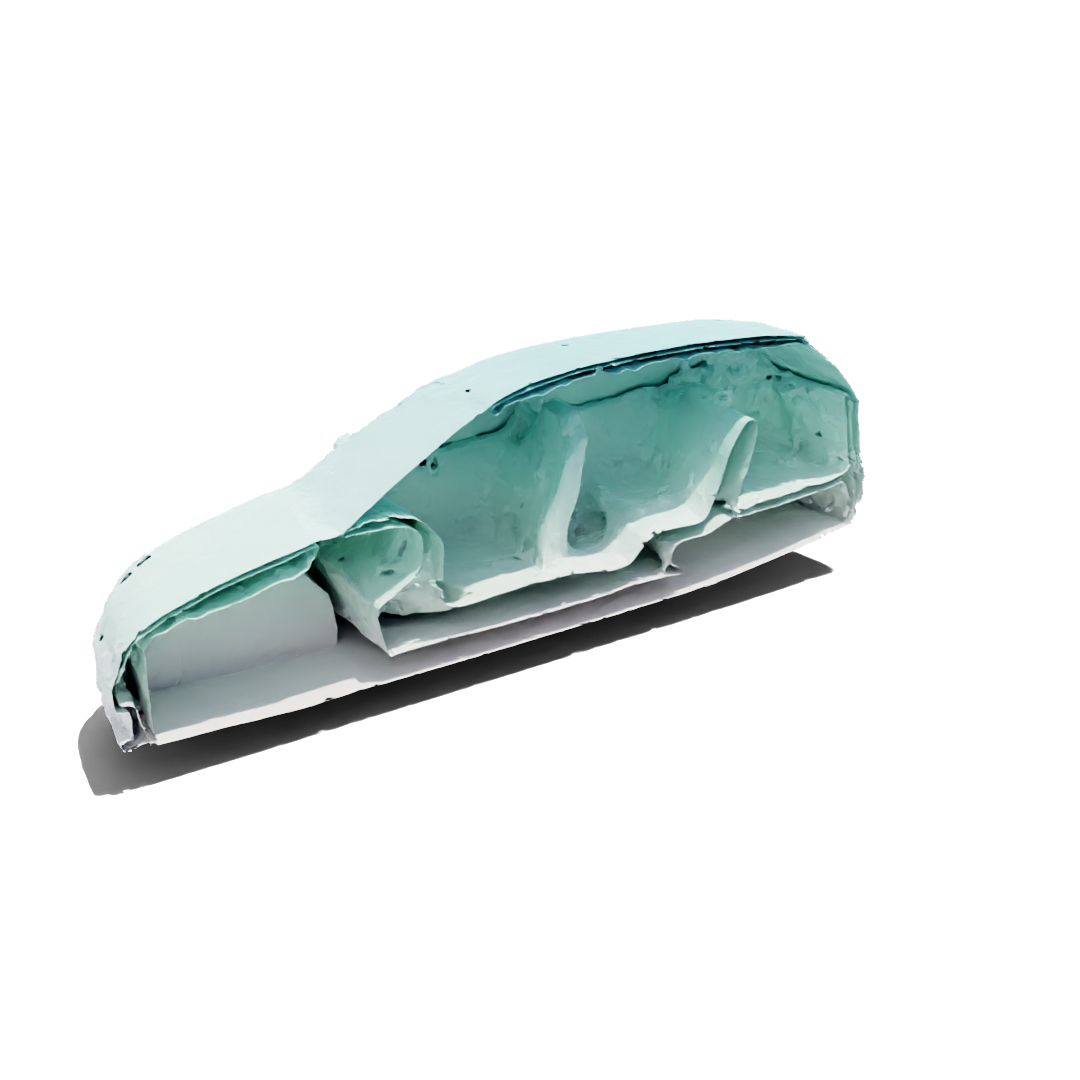

Our models are able to generate consistent inner structures, e.g., seats, steering wheels, or engines.

[1] Gao, Jun, et al. "GET3D: A Generative Model of High Quality 3D Textured Shapes Learned from Images." Advances in Neural Information Processing Systems 35 (2022): 31841-31854.

[2] Liu, Zhen, et al. "MeshDiffusion: Score-based Generative 3D Mesh Modeling." The Eleventh International Conference on Learning Representations. 2022.

@article{kalischek2023tetradiffusion,
  title={TetraDiffusion: Tetrahedral Diffusion Models for 3D Shape Generation},
  author={Kalischek, Nikolai and Peters, Torben and Wegner, Jan D and Schindler, Konrad},
  journal={arXiv preprint arXiv:2211.13220},
  year={2023}
}